You know what? I’m gonna disengage here. You’re not hearing what I am saying.

I’m just this guy, you know?

#### MAXLOGAGE=24.0

Up to how many hours of queries should be imported from the database and logs? Values greater than the hard-coded maximum of 24h need a locally compiled `FTL` with a changed compile-time value.

I assume this is the setting you are suggesting can extend the query count period. It still will only give you the last N hours’ worth of queries, which is not what OP asked. I gather OP wants to see the cumulative total of blocked queries over all time, and I doubt the FTL database tracks the data in a usable way to arrive at that number.

Ah, well, if you know differently, then please do share with the rest of us. I think the phrasing in my post makes it pretty clear I was open to being corrected.

So, like a running sum? No, I don’t think so, not in Pi-hole at least.

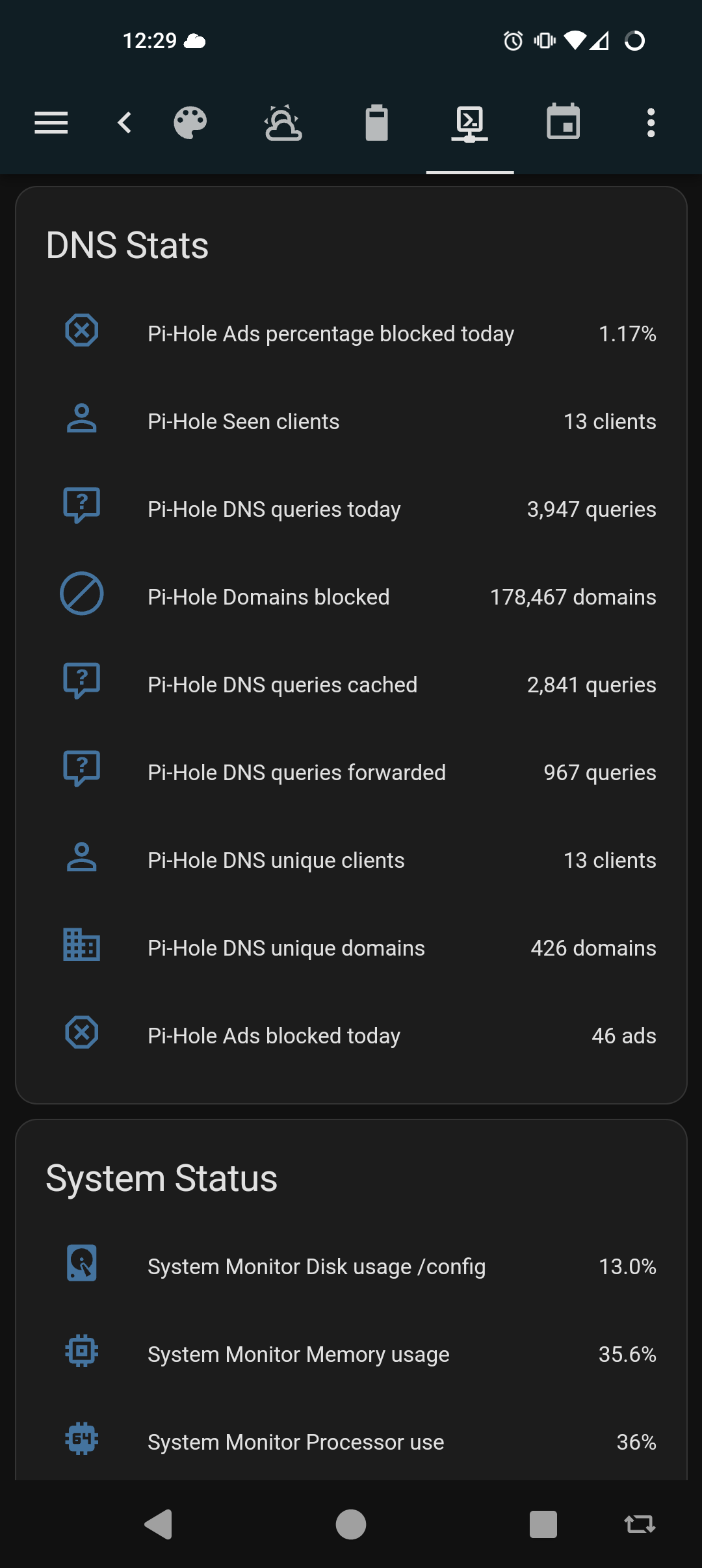

Pi-hole does have an API you could scrape, though. A Prometheus stack could track it and present a dashboard that shows the summation you want. There are other stats you could pull as well. This is a quick sample of what my Home Assistant integration sees.
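As a rough sketch of the scraping idea: the function below turns a Pi-hole summary into Prometheus' text exposition format. It assumes a Pi-hole v5-style raw (unformatted) summary with `ads_blocked_today` and `dns_queries_today` fields; Pi-hole v6 has a different API, so check your version. The metric names are hypothetical, and serving the output over HTTP is left out. The values are exported as gauges because these 24-hour figures go up and down; an all-time total still needs separate bookkeeping, since a rolling figure can’t simply be summed.

```python
def to_prometheus(summary: dict) -> str:
    """Render selected Pi-hole summary fields as Prometheus text-format gauges.

    Field names are assumed from the Pi-hole v5 raw summary; metric names
    are hypothetical. Gauges, not counters, because the 24h values fluctuate.
    """
    fields = {
        "ads_blocked_today": "pihole_blocked_queries_24h",
        "dns_queries_today": "pihole_dns_queries_24h",
    }
    lines = []
    for src, metric in fields.items():
        if src not in summary:
            continue  # skip fields this Pi-hole version doesn't report
        lines.append(f"# TYPE {metric} gauge")
        lines.append(f"{metric} {float(summary[src])}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    sample = {"ads_blocked_today": 1234, "dns_queries_today": 20000}
    print(to_prometheus(sample), end="")
```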

That counter, I believe, is for the last 24 hours. It will fluctuate up and down across your active daily periods.
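To show why a “last 24 hours” number wobbles, here’s a toy rolling-window counter in Python. It’s not how FTL implements its stats, just the same idea in miniature: events older than the window drop out of the count as time moves on, so the total falls during quiet hours even though nothing was “un-blocked.”

```python
from collections import deque


class RollingCounter:
    """Count events seen within the last `window` seconds (toy illustration)."""

    def __init__(self, window: float = 24 * 3600):
        self.window = window
        self.events = deque()

    def add(self, ts: float) -> None:
        # Timestamps are assumed to arrive in increasing order.
        self.events.append(ts)

    def count(self, now: float) -> int:
        # Expire events that have aged out of the window.
        while self.events and self.events[0] <= now - self.window:
            self.events.popleft()
        return len(self.events)
```

For example, two events at hour 0 and hour 1 both count at hour 2, but only one is left exactly 24 hours after the first, and none an hour after that.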

Unless I misunderstand your question, draw.io can be downloaded as a standalone Linux application and run locally.

Likewise, the Xfig package should be available in most Linux repos. It’s old, but good enough for a quick sketch.

edit: aha. My mistake. My eyes slid over ‘open source’ in the title*, and even still I hadn’t realized it was an Apache license.

* Whaaat, it was pre-coffee? Let the purest among us cast the first stone.

It’s possible I’m mistaken on Flatpak vs. Snap. I don’t use either of them, myself.

Functionally they’re no different. LMDE draws its packages from Debian (probably stable) repos while mainline Mint draws from Ubuntu’s. So yes, Mint will have overall newer packages than LMDE but it’s generally rare for that to affect your ability to get work done unless some new feature you were waiting for gets introduced.

Ubuntu is the enterprise fork of Debian backed by Canonical, and as such has contributed some controversy to the ecosystem.

Ubuntu leverages Snap packages which are considered ‘bloaty’ and ‘slow’ by a plurality of people with opinions on these matters. They work. Mint incorporates the Snap store into their package management. You might just need to turn it on in the settings.

With mainline Mint you get new base OS packages on Ubuntu’s release cycle, and the Snap store.

With LMDE, then, you get a stable base OS on Debian’s rock-solid foundation and release cycle, and can still get your fresh software from the Snap store.

IMO, they’re the same for like 85% of use cases. I find I end up going to extra measures to disable certain Ubuntu-isms on my own systems that run it, effectively reverting it to Debian by another name.

For a student and occasional gamer, the trade-off is having a stable base for your learning needs while still being able to get the latest desktop apps from Snap.

Linux Mint Debian Edition (LMDE) is a solid pick. All the perks and integration of Mint, without Ubuntu.

…Ubuntu which, yes, is a Debian downstream. People have their opinions on it. It works. It has its nuisances, but it works.

Oh, shoot. If you’re gonna roll your own then that’s probably the better play because at least then the firmware won’t be all locked down and you can pick known-compatible parts. Get it with no OS and sort it out later if you need to.

It’s easy enough to buy a Windows license key later on if you need it. The school might even make it available to you at a student discount. You could even boot it from a USB drive.

Heck, I ran Linux on my college computers back in the 90s. It was just a thing you did. Ah, memories…

Anyhoo, it largely depends on the school, but for most intents and purposes Windows, Mac and Linux are interoperable. By that I mean they can generally open, manipulate and share all of the common document formats natively, with some minor caveats.

Many schools also have access to Microsoft O365, which makes the MS Office online suite available as well. All you really need to use that is a web browser.

I work in an office environment these days where Windows, Mac and Linux are all well supported and in broad use. I use Linux (Debian) exclusively, one coworker is all-Windows, and another is all-Mac. Our boss uses Windows on the desktop but also uses a MacBook. We’re able to collaborate and exchange data without many problems.

I would say the first of the two main challenges you’re liable to face is Word files that include forms or other uncommon formatting structures. LibreOffice is generally able to deal with them, but may mangle some fonts and formatting. It’s not common, but it does happen.

The other main challenge could be required courseware-- specialized software used in a curriculum for teaching-- and proctoring software for when you’re taking exams online. Those might require Windows or Mac.

If it ever comes up, Windows will run in a Virtual Machine (VM) just fine. VirtualBox by Oracle is generally free for individual use, and is relatively easy to start up. Your laptop will probably come with Windows pre-installed, so you could just nuke it, install Linux, install VirtualBox, and then install Windows as a VM using the license that came with your laptop. You’d need to ask an academic advisor at the school if that’s acceptable for whatever proctor software they use.

I recommend against dual-booting a Windows environment if you can avoid it. Linux and Windows are uneasy roommates, and will occasionally wipe out each other’s boot loader. It’s not terribly difficult to recover from, but there is a risk that it could (will) happen at the WORST possible moment. However, it might be unavoidable if they use proctoring software that requires Windows on bare metal. Again, you’d have to ask the school.

Good luck!

I’d meant smaller as in feature set, but I take your point.

IMO, using Gecko instead of WebView-- which is based on Chromium-- is a plus. Chrome itself gets some bad press for being invasive and hostile to ad blockers. Yes, Mozilla has been getting too cozy in its advertiser handling and telemetry practices, but you can still disable those. They still have a lot more credibility with me than Google & Chrome/Chromium, who are first and foremost an advertising platform.

You said not Firefox, but have you considered Firefox Focus? It’s a much smaller app with the privacy features all enabled by default.

Secure file transfers frequently trade off some performance for their crypto. You can’t have it both ways. (Well, you can, but you’d need hardware crypto offload or end-to-end MACsec, both of which are more exotic use cases.)

rsync is basically a copy command with a lot of knobs and stream optimization. It can also invoke SSH to pipeline the data over the network encrypted, at the cost of SSH’s encryption overhead.

Your other two options are faster because of write-behind caching in the protocol and because they transfer in the clear-- you don’t bog down the stream with crypto overhead, but you’re also exposing your payload.

File managers are probably the slowest of your options because they’re a feature of the DE, and there are more layers of calls between your client and the data stream. Plus, it’s probably leveraging one of NFS, Samba or SSHFS anyway.

I believe `rsync -e ssh` is going to be your best overall case for secure, fast, and xattrs (add `-X`, since archive mode `-a` alone doesn’t preserve extended attributes). SCP might be a close second. SSHFS is a userland (FUSE) filesystem and might suffer some penalties for it.
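Here’s a minimal Python sketch that assembles that rsync-over-SSH command and runs it only when asked. The host and paths are hypothetical, and both ends need a filesystem with xattr support for `-X` to have anything to carry over. In any recent rsync, `host:path` targets already go over SSH by default; `-e ssh` just makes that explicit.

```python
import shlex
import subprocess


def rsync_over_ssh(src: str, host: str, dest: str, dry_run: bool = True) -> list:
    """Build (and optionally run) an rsync-over-SSH command that keeps xattrs."""
    cmd = [
        "rsync",
        "-a",        # archive mode: recursion, perms, times, symlinks, etc.
        "-X",        # extended attributes, which -a does not include
        "-e", "ssh", # use SSH as the remote shell (encrypted transport)
        src,
        f"{host}:{dest}",
    ]
    if dry_run:
        print(shlex.join(cmd))  # just show what would run
    else:
        subprocess.run(cmd, check=True)
    return cmd
```

Remember rsync’s trailing-slash rule: `/data/` copies the contents of `/data`, while `/data` copies the directory itself.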

I do this: a Debian Live image and an encrypted LVM for home. It came in handy a few times for the odd system rescue.

Unbound will take updates at runtime via its remote-control interface (`unbound-control`). You could either write exit hooks on your clients, or use the `on commit` event in isc-dhcp-server to construct parameters and execute a script when a new lease is handed out.
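A sketch of the script side, in Python: given a client hostname and an IPv4 lease, it builds `unbound-control local_data` commands for a matching A record and PTR record. The `lan` zone, the TTL, and the hostname check are hypothetical choices, and it assumes `remote-control` is enabled in `unbound.conf`. As far as I know, records added this way are runtime-only and disappear when Unbound restarts.

```python
import ipaddress
import re

# Single DNS label: letters, digits, hyphens; no leading/trailing hyphen.
LABEL_RE = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?")


def local_data_commands(hostname: str, ip: str, zone: str = "lan", ttl: int = 300) -> list:
    """Build unbound-control commands adding A and PTR records for a DHCP lease."""
    if not LABEL_RE.fullmatch(hostname):
        raise ValueError(f"refusing odd hostname from DHCP client: {hostname!r}")
    addr = ipaddress.IPv4Address(ip)  # raises for IPv6 or malformed input
    fqdn = f"{hostname}.{zone}."
    ptr = addr.reverse_pointer + "."  # e.g. 23.1.168.192.in-addr.arpa.
    return [
        ["unbound-control", "local_data", f"{fqdn} {ttl} IN A {addr}"],
        ["unbound-control", "local_data", f"{ptr} {ttl} IN PTR {fqdn}"],
    ]
```

On the dhcpd side, the `on commit` block would pass the client’s hostname and leased address (for example via `binary-to-ascii(10, 8, ".", leased-address)`) to a small wrapper that runs each returned command with `subprocess.run(cmd, check=True)`.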

I used to selfhost more, but honestly it started to feel like a job, and it was getting exhausting (maybe also irritating) to keep up with patches & updates across all of my services. I made decisions about the risks of compromise and data loss from breaches and system failures. In the end, I decided my time was more valuable, so now I pay someone to incur those risks for me.

For my outward-facing stuff, I used to selfhost my own DNS domains, email + IMAP, web services, and an XMPP service for friends and family. Most of that I’ve moved off to paid private hosting. Now I maintain my DNS through Porkbun, email through MXroute, and we use Signal instead of XMPP. I still host and manage my own websites but am considering moving to a ghost.org account, or perhaps just hosting my blogs on a droplet at DigitalOcean. My needs are modest and it’s all just personal stuff. I learned what I wanted, and I’m content to be someone else’s customer now.

At home, I still maintain my custom router/firewall services, Unifi wireless controller, Pi-hole + Unbound recursive resolver, WireGuard, Jellyfin, Home Assistant, Frigate NVR, and a couple of ADS-B feeders. Since it’s all on my home LAN and for my and my wife’s personal use, I can afford to let things be down a day or two until I get around to fixing them.

Still need to do better on my backup strategies, but it’s getting there.

I use Porkbun’s API. It’s not sophisticated, but it works.
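For a sense of what “not sophisticated” looks like, here’s a Python sketch that builds a record-update request with only the standard library. The base URL and the `dns/editByNameType` endpoint are my reading of Porkbun’s v3 API docs, so treat them as assumptions and verify them before use; the domain, keys, and address below are placeholders.

```python
import json
import urllib.request

# Assumed from Porkbun's v3 API documentation; verify before relying on it.
API_BASE = "https://api.porkbun.com/api/json/v3"


def build_update_request(domain: str, subdomain: str, rtype: str, content: str,
                         api_key: str, secret_key: str, ttl: int = 600) -> urllib.request.Request:
    """Build a POST that edits all `rtype` records for subdomain.domain."""
    url = f"{API_BASE}/dns/editByNameType/{domain}/{rtype}/{subdomain}"
    body = json.dumps({
        "apikey": api_key,          # credentials travel in the JSON body
        "secretapikey": secret_key,
        "content": content,         # new record value, e.g. an IP address
        "ttl": str(ttl),
    }).encode()
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
```

Sending it is then `urllib.request.urlopen(req)`; keeping the build step separate makes it easy to inspect the request without touching live DNS.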

Gandi changed their TOS and price structure last year, so I ported everything over to Porkbun for a small savings, but mostly as a big middle finger to Gandi.

If you’re gonna get banged that kind of cash for functions you’re already using, you may as well look at better registrars, and get better value for your spend.

Shop around.

No worries, the other poster just wasn’t being helpful. And/or doesn’t understand statistics & databases, but I don’t care to speculate on that or to waste more of my time on them.

The setting above maxes out at 24h in stock builds, but can be extended beyond that if you’re willing to recompile `FTL` with a different compile-time value, allowing a deeper look-back window for your query log. Even then, a second database setting farther down that page sets the max age of stored queries to 1y, so at best you’d get a running tally of up to a year. That would probably come at the expense of dashboard page-load performance, since the number is probably computed at page load. The live DB call is intended for relatively short windows, not the database’s lifetime.
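If you’d rather query the long-term database directly, a Python sketch like this counts blocked rows since a given time. It assumes the v5-era schema, a `queries` table with `timestamp` and `status` columns, and a set of status codes meaning “blocked” taken from the v5 FTL database docs. Newer versions add more codes, so check the status table for your version.

```python
import sqlite3

# Status codes assumed to mean "blocked" per Pi-hole v5's FTL database docs.
BLOCKED_STATUSES = (1, 4, 5, 6, 7, 8, 9, 10, 11)


def count_blocked(conn: sqlite3.Connection, since: int = 0) -> int:
    """Count blocked queries with a Unix timestamp >= `since`."""
    placeholders = ",".join("?" * len(BLOCKED_STATUSES))
    sql = (
        "SELECT COUNT(*) FROM queries "
        f"WHERE timestamp >= ? AND status IN ({placeholders})"
    )
    (n,) = conn.execute(sql, (since, *BLOCKED_STATUSES)).fetchone()
    return n
```

Opening the database read-only, e.g. `sqlite3.connect("file:/etc/pihole/pihole-FTL.db?mode=ro", uri=True)`, keeps the script from contending with FTL’s own writes; that path is the usual default but may differ on your install. This still only sees what the retention setting has kept.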

If you want an all-time count, you’ll have to track it off box because FTL doesn’t provide an all-time metric, or deep enough data persistence. I was just offering up a methodology that could be an interesting and beneficial project for others with similar needs.
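One way to do the off-box tracking, sketched in Python: run this on a schedule, and each run counts only blocked rows newer than the last `id` it saw, adding them to a total kept in a small JSON file. It assumes the same v5-era `queries` table and blocked status codes as Pi-hole’s v5 docs describe, and that row ids only ever increase. The reset check is a rough heuristic for a recreated database, and if runs stop for longer than the database retention, the rows in that gap are lost to the tally.

```python
import json
import sqlite3
from pathlib import Path

# Assumed "blocked" status codes (Pi-hole v5 docs); verify for your version.
BLOCKED_STATUSES = (1, 4, 5, 6, 7, 8, 9, 10, 11)


def update_tally(conn: sqlite3.Connection, state: dict) -> dict:
    """Add blocked queries newer than state['last_id'] to state['total']."""
    last = state.get("last_id", 0)
    (max_id,) = conn.execute("SELECT MAX(id) FROM queries").fetchone()
    if max_id is None:
        return state  # empty table: nothing new to count
    if max_id < last:
        last = 0  # ids went backwards: the database was probably recreated
    placeholders = ",".join("?" * len(BLOCKED_STATUSES))
    (new_blocked,) = conn.execute(
        "SELECT COUNT(*) FROM queries "
        f"WHERE id > ? AND id <= ? AND status IN ({placeholders})",
        (last, max_id, *BLOCKED_STATUSES),
    ).fetchone()
    return {"last_id": max_id, "total": state.get("total", 0) + new_blocked}


def load_state(path: Path) -> dict:
    """Read the persisted tally, starting fresh if the file doesn't exist."""
    if path.exists():
        return json.loads(path.read_text())
    return {"last_id": 0, "total": 0}


def save_state(path: Path, state: dict) -> None:
    path.write_text(json.dumps(state))
```

The watermark keeps each run cheap, and the running total lives off the Pi-hole box, so it survives database pruning.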

Hey, this was fun. See you around.