To actually answer your question, you need some kind of job scheduling service that manages the whole operation, whether that’s SSM, Ansible, or something else. With Ansible, you can set a parameter that limits parallelism (the play-level `serial` keyword) so that it only updates 3 or so hosts at a time until they are all done. If one of those upgrades fails, then it will abort the process. There’s a parameter to make it die if any host fails, but I don’t recall it right now.
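To make the batching idea concrete, here is a rough Python sketch of a rolling update that handles a few hosts at a time and aborts as soon as a batch contains a failure. It is not Ansible code: `rolling_update`, `RollingUpdateAborted`, and the `upgrade` callback are names made up for illustration. In Ansible itself the batch size is the play-level `serial` keyword, and `any_errors_fatal: true` is the setting that stops the play when any host fails.

```python
from typing import Callable, List, Sequence


class RollingUpdateAborted(Exception):
    """Raised when a batch contains at least one failed host (hypothetical name)."""

    def __init__(self, failed: List[str], completed: List[str]) -> None:
        super().__init__(f"aborted; failed hosts: {failed}")
        self.failed = failed
        self.completed = completed


def rolling_update(
    hosts: Sequence[str],
    upgrade: Callable[[str], bool],
    batch_size: int = 3,
) -> List[str]:
    """Upgrade hosts in batches of `batch_size`; stop after the first bad batch.

    `upgrade` is an assumed callback that returns True on success.
    Hosts inside a batch are handled one after another here for simplicity;
    a real scheduler would run them in parallel.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")

    completed: List[str] = []
    for start in range(0, len(hosts), batch_size):
        batch = list(hosts[start:start + batch_size])
        # Every host in the current batch is attempted before deciding.
        failed = [host for host in batch if not upgrade(host)]
        if failed:
            # Later batches are never touched once something fails.
            raise RollingUpdateAborted(failed, completed)
        completed.extend(batch)
    return completed
```

With seven hosts and a batch size of 3, a failure on the fifth host means the first three are done, hosts four through six were attempted, and the seventh is left alone.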

43·4 months ago

There used to be a saying that Intel had a vault where they laid out the next ten years of CPU tech, so when they invented something new they put it there so they could make profits and control the advancement.

Now, I’m not sure which thing they got wrong, but if it was true, I think Intel was probably caught off guard by all the speculative execution security issues and the GPU revolution (blockchain and AI).

2·8 months ago

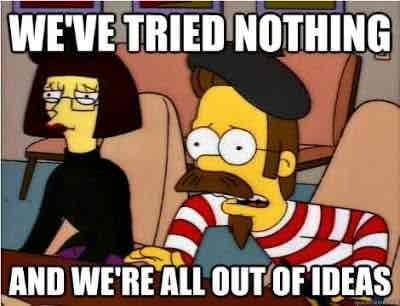

How have I never seen that before? It’s perfection.

14·8 months ago

Don’t you go and reinstall, learn how to fix this

1·8 months ago

Which crypto network are you talking about that can be operated for free? PoW is expensive and wasteful, and PoS is pretty much back to a regular database again.

At the end of the day here, this is a simple transaction ledger that doesn’t need to be turned into crypto, it just needs a party interested in moving the money around in these micropayments with minimal fees.

3·9 months ago

Adobe is actually one of the leading actors in this field; take a look at the Content Authenticity Initiative (https://contentauthenticity.org/).

Like the other person said, it’s based on cryptographic hashing and signing. Basically the standard would embed metadata into the image.
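As a rough illustration of "hash the image, then sign the metadata", here is a minimal Python sketch. It is not the Content Authenticity Initiative's actual format or API. Real provenance systems use public-key signatures; an HMAC with a shared secret is swapped in here only so the example runs on the standard library. `make_manifest` and `verify_manifest` are hypothetical names.

```python
import hashlib
import hmac
import json
from typing import Any, Dict


def make_manifest(image_bytes: bytes, claims: Dict[str, Any], key: bytes) -> Dict[str, Any]:
    """Bind metadata claims to an image by hashing the image and signing both."""
    payload = {
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
        "claims": claims,
    }
    # Serialize deterministically so signer and verifier see the same bytes.
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_manifest(image_bytes: bytes, manifest: Dict[str, Any], key: bytes) -> bool:
    """Return True only if the signature is valid and the image hash matches."""
    body = json.dumps(manifest["payload"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the metadata was altered or signed with another key
    current_hash = hashlib.sha256(image_bytes).hexdigest()
    return manifest["payload"]["sha256"] == current_hash
```

Changing either the image bytes or the claims after signing makes `verify_manifest` return False, which is the property the embedded metadata relies on.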

1·10 months ago

Realistically, yes. But it’s a phrase and it’s important that they start doing that first. Maybe it’s their intention to do it publicly.

Also, sure, but a Wireguard installation is going to be much more secure than a Nextcloud that you aren’t sure if it’s configured correctly. And Tailscale doubly so.

11·10 months ago

Please set up Tailscale or a Wireguard VPN before you start forwarding ports on your router.

Your configuration as you have described it so far is setting yourself up for a world of hurt, in that you are going to be a target for hackers from literally the entire world.

2·10 months ago

There is a lot of complexity and overhead involved in either system. But, the benefits of containerizing and using Kubernetes allow you to standardize a lot of other things with your applications. With Kubernetes, you can standardize your central logging, network monitoring, and much more. And from the developer’s perspective, they usually don’t even want to deal with VMs. You can run something like Docker Desktop or Rancher Desktop on the developer system and that allows them to dev against a real, compliant k8s distro. Kubernetes is also explicitly declarative, something that OpenStack was having trouble being.

So there are two swim lanes, as I see it: places that need to use VMs because they are using commercial software, which may or may not explicitly support OpenStack, and companies trying to support developers, in which case the developers probably want a system that affords a faster path to production while meeting compliance requirements. OpenStack offered a path towards that latter case, but Kubernetes came in and created an even better path.

PS: I didn’t really answer your “capable” question though. Technically, you can run a Kubernetes cluster on top of OpenStack, so by definition Kubernetes offers a subset of the capabilities of OpenStack. But, it encapsulates the best subset for deploying and managing modern applications. Go look at some demos of ArgoCD, for example. Go look at Cilium and Tetragon for network and workload monitoring. Look at what Grafana and Loki are doing for logging/monitoring/instrumentation.

Because OpenStack lets you deploy nearly anything (and believe me, I was slinging OVAs for anything back in the day), you will never get to that level of standardization of workloads that allows you to do those kinds of things. By limiting what the platform can do, you can build really robust tooling around the things you need to do.

2·10 months ago

I used to be a certified OpenStack Administrator and I’ll say that K8s has eaten its lunch in many companies and in mindshare.

But if you do it, look at TripleO instead of installing from docs.

I wish I could fully endorse Excalidraw, but it only partially works in self-hosted mode. For a single user it’s fine, but not much works beyond that.

2·11 months ago

This article was contemporaneously posted with the actual announcement, but I agree that I don’t know why it was posted here 6 years later.

7·11 months ago

I think it’s worth saying that the head unit failing in this scenario is very disruptive for two reasons:

First and foremost, the purpose of this journey in this car is to review the car. So if the head unit craps out, and he doesn’t make every effort to reboot it, and he mentions it in the review, he loses a lot of credibility from the users and industry folks. Could you imagine a review for a computer where it crashes or turns off, and the reviewer just says “welp, that’s all folks”?

My second point is that he is navigating in an unfamiliar place to a charger for the car. If you’re coming from Tesla or AA/CarPlay, this is something you expect to work flawlessly. And it’s part of the review that’s worth discussing whether or not it works.

In my opinion, even if he 100% knew where he was going, his behaviors are justified for a review.

3·11 months ago

I’m just saying what I saw over at https://old.reddit.com/r/ledgerwallet/search?q=Lost+my+btc+upgrade&restrict_sr=on&sort=relevance&t=all

Obviously I haven’t checked up on all of those, but it does seem to happen a bit. I’m not sure how frequently would be considered okay here, but that’s the sort of thing that shouldn’t happen.

13·11 months ago

With crypto, you hold your own money

You own a cryptographic key that a bunch of strangers have decided points to a spot on a ledger. These strangers have no legal connection to you, but things have been working out pretty well so far because your incentives align.

As a bunch of Ledger owners are finding out, there is a reason for FDIC insurance of banks: so that people don’t have to be exposed to the dangers of storing all their money under their mattresses. Everyone recommends getting your crypto into a hardware wallet, but what happens when a Ledger update bricks it? Or the company decides to backdoor it to escrow your “private” keys? And what can you do with those hardware wallet funds besides HODL? Can you imagine if every time you wanted to spend part of your dirty fiat savings, you had to expose all of it to danger to do so?

23·11 months ago

Because sometimes even criminals need to buy things that aren’t illegal, I guess. And the legitimate people who have those things don’t want to play games dealing with fake internet money.

If I want to buy a jetski, the place I buy it from isn’t going to take crypto because the people that sell the parts for it don’t take crypto and the people who build it can’t pay for food in crypto.

Crypto is only useful for rug pull scams, money laundering, and black-market transactions. Its real innovation is undoing centuries of banking regulations so that people can learn the hard way why all those regulations exist.

32·11 months ago

The transparency is the feature that makes it great. I can buy drugs or whatever, and in exchange you buy an NFT from me of equal value. Now when the bank comes and says “where did this >$15k transaction come from?” I can point to the blockchain and say that I sold my fancy monkey pic.

This has been a thing in the physical art world for a while (https://complyadvantage.com/insights/art-money-laundering/); this just made it easier.

You’re on the right track here. Longhorn kind of makes RAID irrelevant, but only for data stored in Longhorn. So anything on the host disk and not a PV is at risk. I tend to use MicroOS and k3s, so I’m okay with the risk, but it’s worth considering.

For replicas, I wouldn’t jump straight to 3 and ignore 2. A lot of distributed storage systems use 3 so that they can resolve the “split brain” problem. Basically, if half the nodes can’t talk to each other, the side with quorum (2 of 3) knows that it can keep going while the side with 1 of 3 knows to stop accepting writes it can’t replicate. But Longhorn already does this in a Kubernetes native way. So it can get away with replica 2 because only one of the replicas will get the lease from the kube-api.
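Here is a small Python sketch of the two ideas in that paragraph: majority quorum, and a single lease that only one side can hold. The `Lease` class is a toy in-memory stand-in for the arbitration described above, not Longhorn’s or Kubernetes’ actual API, and both names are made up for illustration.

```python
from typing import Optional


def has_quorum(reachable: int, total: int) -> bool:
    """A partition may keep accepting writes only if it sees a strict majority."""
    return reachable > total // 2


class Lease:
    """Toy single-holder lease (hypothetical): the first claimant wins."""

    def __init__(self) -> None:
        self.holder: Optional[str] = None

    def try_acquire(self, candidate: str) -> bool:
        # An external arbiter hands the lease to exactly one replica,
        # so a 1-vs-1 split cannot end with both sides writing.
        if self.holder is None:
            self.holder = candidate
        return self.holder == candidate
```

With 3 replicas, `has_quorum(2, 3)` is true and `has_quorum(1, 3)` is false, so exactly one side keeps going. With 2 replicas split one against one, `has_quorum(1, 2)` is false on both sides, which is why something like a lease is needed to break the tie.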

Longhorn is basically just acting like a fancy NFS mount in this configuration. It’s a really fancy NFS mount that will work well with Kubernetes, for things like PVC resizing and snapshots, but Longhorn isn’t really stretching its legs in this scenario.

I’d say leave it, because it’s already set up. And someday you might add more (non-RAID) disks to those other nodes, in which case you can set Longhorn to replicas=2 and get some better availability.

That would be great. Just a bit that sends an email from a different innocuous-sounding Gmail every month with a generic problem like “app crashes on <random device>” to see if there is a response. If you miss 3 in a row, you’re out.
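The "3 in a row" rule is simple enough to sketch in Python. This only covers the bookkeeping, not the email sending, and `is_out` and its parameters are made-up names.

```python
from typing import Iterable


def is_out(responses: Iterable[bool], max_misses: int = 3) -> bool:
    """Return True once `max_misses` consecutive test emails went unanswered.

    `responses` is the monthly history, oldest first: True means the
    developer replied to that month's probe, False means they did not.
    """
    streak = 0
    for responded in responses:
        # Any reply resets the streak; only consecutive misses count.
        streak = 0 if responded else streak + 1
        if streak >= max_misses:
            return True
    return False
```

A history like miss, miss, reply, miss, miss stays in, because a single reply resets the count.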