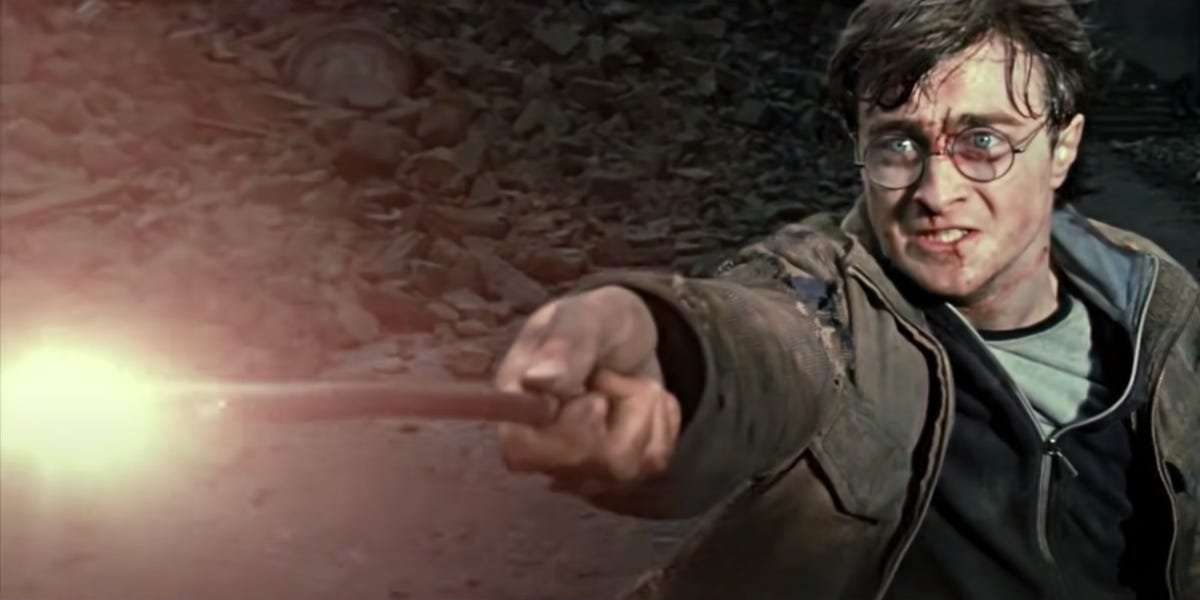

OpenAI now tries to hide that ChatGPT was trained on copyrighted books, including J.K. Rowling’s Harry Potter series::A new research paper laid out ways in which AI developers should try to avoid showing that LLMs have been trained on copyrighted material.

Let’s not pretend that LLMs are like people, who read a bunch of books and draw inspiration from them. An LLM does not think, nor does it have an actual creative process like we do. It should still be a breach of copyright.

… you’re getting into philosophical territory here. The plain fact is that LLMs generate cohesive text that is original and doesn’t occur in their training sets, and it’s very hard if not impossible to get them to quote back copyrighted source material to you verbatim. Whether you want to call that “creativity” or not is up to you, but it certainly seems to disqualify the notion that LLMs commit copyright infringement.

This topic is fascinating.

I really do think I understand both sides here and want to find the hard line that separates man from machine.

But it feels, to me, that some philosophical discussion may be required. Art is not something that is just manufactured. “Created” is the word to use without quotation marks. Or maybe not, I don’t know…

I wasn’t referring to whether the LLM commits copyright infringement when creating a text (though that’s an interesting topic as well), but rather to the act of feeding it the texts. My point was that it is not like us, in the sense that we read texts and draw inspiration from them. It’s just taking texts and digesting them. And also, from a privacy standpoint, I feel kind of disgusted at the thought of LLMs having used comments such as these (not exactly these, but you get it) for this purpose as well, without any sort of permission on our part.

That’s mainly my issue, the fact that they have done so the usual capitalistic way: it’s easier to ask for forgiveness than to ask for permission.

I think you’re putting too much faith in humans here. As best we can tell, the only difference between how we compute and what these models do is scale and complexity. Your brain often lies to you and makes up reasoning behind your actions after the fact. We’re just complex networks doing math.

Unless you are going to argue the act of feeding it the texts is distributing the original text or doing some kind of public performance of the text, I don’t see how.

*could