DEF CON Infosec super-band the Cult of the Dead Cow has released Veilid (pronounced vay-lid), an open source project applications can use to connect up clients and transfer information in a peer-to-peer decentralized manner.

The idea here is that apps – mobile, desktop, web, and headless – can find and talk to each other across the internet privately and securely without having to go through centralized and often corporate-owned systems. Veilid provides code for app developers to drop into their software so that their clients can join and communicate in a peer-to-peer community.

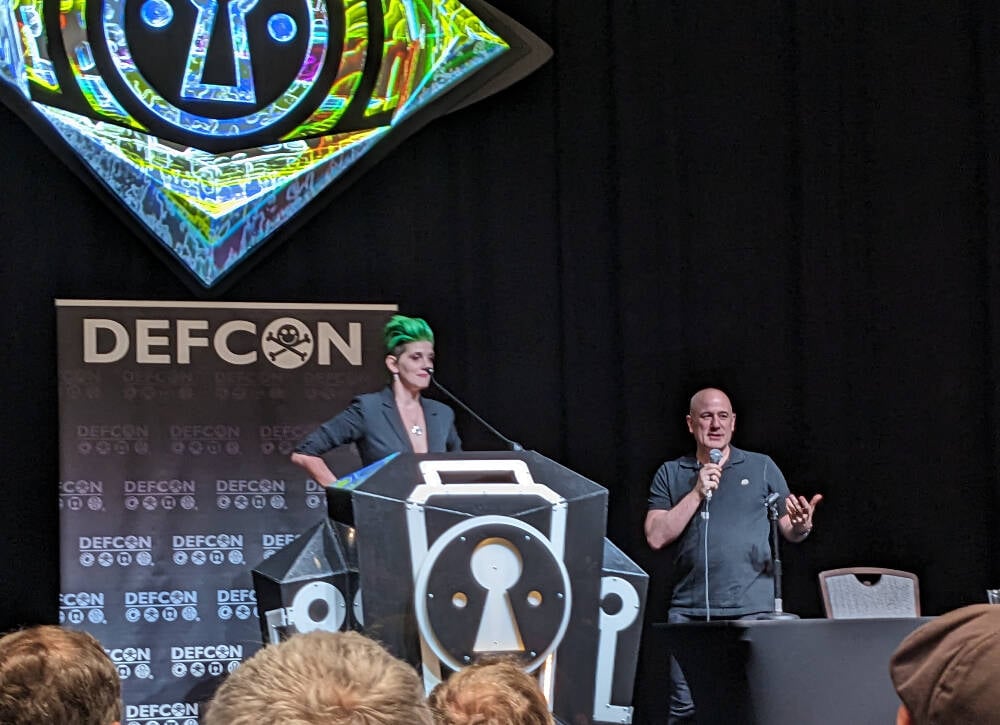

In a DEF CON presentation today, Katelyn “medus4” Bowden and Christien “DilDog” Rioux ran through the technical details of the project, which has apparently taken three years to develop.

The system, written primarily in Rust with some Dart and Python, takes aspects of the Tor anonymizing service and the peer-to-peer InterPlanetary File System (IPFS). If an app on one device connects to an app on another via Veilid, it shouldn’t be possible for either client to know the other’s IP address or location from that connectivity, which is good for privacy, for instance. The app makers can’t get that info, either.

Veilid’s design is documented here, and its source code is here, available under the Mozilla Public License Version 2.0.

“IPFS was not designed with privacy in mind,” Rioux told the DEF CON crowd. “Tor was, but it wasn’t built with performance in mind. And when the NSA runs 100 [Tor] exit nodes, it can fail.”

Unlike Tor, Veilid doesn’t run exit nodes. Each node in the Veilid network is equal, and if the NSA wanted to snoop on Veilid users like it does on Tor users, the Feds would have to monitor the entire network, which hopefully won’t be feasible, even for the No Such Agency. Rioux described it as “like Tor and IPFS had sex and produced this thing.”

“The possibilities here are endless,” added Bowden. “All apps are equal, we’re only as strong as the weakest node and every node is equal. We hope everyone will build on it.”

Each copy of an app using the core Veilid library acts as a network node, it can communicate with other nodes, and uses a 256-bit public key as an ID number. There are no special nodes, and there’s no single point of failure. The project supports Linux, macOS, Windows, Android, iOS, and web apps.

Veilid can talk over UDP and TCP, and connections are authenticated, timestamped, strongly end-to-end encrypted, and digitally signed to prevent eavesdropping, tampering, and impersonation. The cryptography involved has been dubbed VLD0, and uses established algorithms since the project didn’t want to risk introducing weaknesses from “rolling its own,” Rioux said.

This means XChaCha20-Poly1305 for encryption, Ed25519 (signatures over the elliptic curve Curve25519) for public-private-key authentication and signing, x25519 for DH key exchange, BLAKE3 for cryptographic hashing, and Argon2 for password hash generation. These could be switched out for stronger mechanisms if necessary in future.
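
To make the "authenticated and timestamped" part concrete, here is a minimal sketch in Python. It is not Veilid's API and not the VLD0 suite: it uses HMAC-SHA256 with a shared key as a standard-library stand-in for public-key signatures, it does no encryption at all, and the names `seal`, `verify`, and `MAX_SKEW_SECONDS` are made up for illustration. It shows only the general idea of rejecting messages that were altered in transit or are too old.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

MAX_SKEW_SECONDS = 30  # hypothetical freshness window, not a Veilid setting


def seal(key: bytes, sender_id: str, body: str, now: Optional[float] = None) -> dict:
    """Attach a timestamp and an authentication tag to a message."""
    envelope = {
        "sender": sender_id,
        "timestamp": time.time() if now is None else now,
        "body": body,
    }
    # Canonical encoding so sender and receiver tag exactly the same bytes.
    payload = json.dumps(envelope, sort_keys=True).encode()
    envelope["tag"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return envelope


def verify(key: bytes, envelope: dict, now: Optional[float] = None) -> bool:
    """Reject envelopes that were altered or fall outside the freshness window."""
    now = time.time() if now is None else now
    fields = {k: v for k, v in envelope.items() if k != "tag"}
    payload = json.dumps(fields, sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, envelope.get("tag", "")):
        return False  # tampered with, or tagged with a different key
    return abs(now - envelope["timestamp"]) <= MAX_SKEW_SECONDS
```

A real design would sign with the sender's private key so that anyone holding the public key can verify, and would encrypt the body as well; the shared-key tag here only keeps the example self-contained.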

Files written to local storage by Veilid are fully encrypted, and encrypted table store APIs are available for developers. Keys for encrypting device data can be password protected.
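
As a rough illustration of password-protecting a storage key, the sketch below stretches a password into a 32-byte key. The article says Veilid uses Argon2 for this; Python's standard library does not ship Argon2, so `hashlib.scrypt` stands in for it here, and the cost parameters, record layout, and function names are assumptions rather than anything from Veilid.

```python
import hashlib
import hmac
import secrets
from typing import Optional


def derive_key(password: str, salt: bytes) -> bytes:
    """Stretch a password into a 32-byte key (scrypt standing in for Argon2)."""
    return hashlib.scrypt(
        password.encode(),
        salt=salt,
        n=2**14,  # CPU/memory cost; illustrative value only
        r=8,
        p=1,
        dklen=32,
    )


def new_protected_key(password: str) -> dict:
    """Create a record that lets the same key be re-derived later."""
    salt = secrets.token_bytes(16)
    key = derive_key(password, salt)
    # Store a hash of the key, never the key itself, to check passwords.
    return {"salt": salt, "check": hashlib.sha256(key).digest()}


def unlock(record: dict, password: str) -> Optional[bytes]:
    """Return the derived key if the password matches, otherwise None."""
    key = derive_key(password, record["salt"])
    if hmac.compare_digest(hashlib.sha256(key).digest(), record["check"]):
        return key
    return None
```

In practice the derived key would feed an authenticated cipher such as XChaCha20-Poly1305, and a failed decryption would serve as the wrong-password signal; the separate check value is only there because this sketch stops short of encrypting anything.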

“The system means there’s no IP address, no tracking, no data collection, and no tracking – that’s the biggest way that people are monetizing your internet use,” Bowden said.

“Billionaires are trying to monetize those connections, and a lot of people are falling for that. We have to make sure this is available,” Bowden continued. The hope is that applications will include Veilid and use it to communicate, so that users can benefit from the network without knowing all the above technical stuff: it should just work for them.

To demonstrate the capabilities of the system, the team built a Veilid-based secure instant-messaging app along the lines of Signal called VeilidChat, using the Flutter framework. Many more apps are needed.

If it takes off in a big way, Veilid could put a big hole in the surveillance capitalism economy. It’s been tried before with mixed or poor results, though the Cult has a reputation for getting stuff done right. ®

Impressive design.

Implicit in the description is that the weakness would be someone monitoring the entire network, if that is even possible.

The more apps and nodes that run Veilid, the more private the system.

I look forward to adoption being vast and wide. The bigger the better.

But cue the “but we need to protect the children” crowd trying to outlaw these protocols.

It’s simple, really: if you have a built-in back door to prevent child porn circulation, then you can use it for anything else, and it WILL eventually be used in other ways.

What I don’t understand about these projects is why can’t we both have them and protect the children (child porn, child trafficking, etc.)?

The reason is that the “protect the children” thing is and always has been a bad faith excuse to expand or establish control over others. That’s not to say that places like TOR don’t have a problem with CSAM but if that were the actual target, it would be addressed in the proposed laws and vigorously pursued. It never is.

Protecting children is always, at most, a token gesture in these laws, which expand censorship and surveillance of the population, while demonstrating complete disregard for the harms and unnecessary risks that they introduce, and while generally also exempting those in power from being impacted.

Indeed.

For a good example of how “extra policing and security” can go too far, look no further than the TSA and all the “terrorism” they protect us from.

If that’s what you have to tell yourself, fine. But at least be intellectually honest here and admit you’re simply willing to condone child abuse because you think Google or whatever looking at your texts is more egregious

Nah. I don’t condone it nor do I think its perpetrators should be able to escape justice, regardless of how wealthy or politically connected they are. They deserve the same as slavers for the lifelong harms that they cause their victims. If you’re intellectually honest, you’ll admit that giving unfettered access to personal information and habits to organizations with poor track records of keeping such access safe is a stupid idea.

ETA: While I don’t like that megacorps have my information, that ship has sailed. What I see as the bigger problem is when the backdoor access and data is stolen by criminal organizations or abused by state actors. Think the increasing rate of identity theft, credit card fraud, and ransomware attacks is bad now? You ain’t seen nothing yet. Just wait until a bad actor has access to everyone’s devices and activity data then starts selling it to whoever is interested (burglars knowing when victims will be absent, state actors not needing to even employ a honeypot for blackmail, properties “mysteriously” being signed over to megacorps, groups committing genocide and censoring any mention on electronic media, etc.). Might sound like an exaggerated enumeration of risks but it’s quite the opposite. If you have any understanding of how online services work, you know that encryption and security are the only reason that the Internet can be used for anything beyond sharing documents.

ETA2: Also, if you’ve paid even the slightest bit of attention to history, you’ll know how much “think of the children” was abused in the 20th century alone to try to prevent access to “black music” (jazz, blues), education, art, rock music, knowledge of sex, fantasy novels, roleplaying games, programs preventing child abuse, etc.

This is empty virtue signalling and false equivalency. Just say it. You think there’s an unknowable volume of real life, actually happening, enablement of the pain, suffering, and death of men, women, and children you’re willing to accept so Google or the government or whatever can’t read your irrelevant and unimportant conversations.

https://en.m.wikipedia.org/wiki/2017_Equifax_data_breach

https://www.bbc.com/news/uk-northern-ireland-66467164

Ah yes. We should absolutely trust large corporations and state actors to keep our information and backdoors safe, and protect our children by checks notes opening them up to actions shown to increase suicide. /s

Come on, mate. Accusing people of supporting child abuse to try to keep them from speaking out about terrible ideas and manipulative excuses that are put forth in bad faith doesn’t look good on you.

Ok, cool? 70% of those don’t apply to this conversation at all.

Look, it’s clear you’re willing to twist yourself into a pretzel and play whataboutism because you refuse to acknowledge the uncomfortable truth. That’s fine, I get it.

Nearly every aspect of our society relies on the exploitation and suffering of others.

For the same reason, we don’t allow government cameras in every public and private bathroom, even though it could stop really shitty people doing really shitty things.

Humans demand personal privacy, and need avenues for that. The quite literal big brother is generally not felt to be something any society wants, even if it could eliminate the shitty people doing really shitty things.

It’s not a tech problem. It’s a societal one.

The fediverse is not private. It is so non-private that I’d say it’s hovering somewhere on the opposite end of the spectrum. That’s ok, because it was not built for privacy. It was built to democratise online spaces, and it does that very well.

Self regulation is possible on the fediverse because a semi central authority (instance admins) can choose to defederate from other semi central authorities (instance admins); this still doesn’t really silence anyone since they can form their own instance and do whatever they want in there.

In person-to-person chats, self regulation does exist: if you don’t like what someone says or does, you just stop communicating or associating with them. If what they’re doing is immoral and illegal, you can report them yourself. What private communication is about is that someone unconnected to the conversation can’t come around snooping without the consent of anybody actually in the conversation.

I would argue it could be more efficient to protect children (and all victims) in our daily lives - show empathy towards others, and improve empathy in societies where necessary (yes, sadly, this is a lengthy process), to the point where no country will seem to be turning a blind eye towards abusers, and where people care & check on the kids they see in the neighborhood. This won’t eliminate all the abuse, but online policing of contents is only fighting the symptoms, so the “offline approach” seems preferable. And surprise - if people are vigilant offline, the excuse for global surveillance goes away & ugly corporate capitalistic assholes need to find a new excuse.

The way they caught that horrible serial abuser in Australia recently is a good example of a detective using localized skills to find the needle in the haystack and identify a blanket in an abuse video.

Actually protecting people? Showing empathy?! Who do you think we are, demoncrats?

It was never about the children or fighting terrorism. To get pedophiles or thwart attacks you have to have people “on the ground”, not snoop on everything.

I think this is a great question, but I would ask it a little differently.

Is it possible for a p2p system to self police for things like cp?

Maybe no one knows how now. But maybe someone can figure it out eventually. Seems like a bit of a logical contradiction but I continue to be amazed at human creativity.

Yeah, they are contradictory concepts to an extent. Making an uncensorable and untraceable protocol means exactly that. Things like the Fediverse are not that and censorship can come through things like defederation and blocking.

That said, they exist on different layers. You could probably run a federated system on top of this protocol and still be able to filter out the illegal and offensive content. It doesn’t mean that content just disappears, it just means you don’t have to subject yourself to it.

Interesting, especially running a federated system on top of the new protocol.

If it were just anonymous content in a public setting, you could use crowd-based morality to filter it. Any hash with a 75% down vote gets blacklisted, that kind of thing. You would have to account for bots and AI, which may not be possible.
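
A toy version of that crowd-vote idea might look like the following. The 75% threshold comes from the comment; everything else (the `VoteLedger` class, the minimum-vote floor, SHA-256 as the content hash) is an assumption made for the sake of the example, and nothing here addresses the bot problem mentioned above.

```python
import hashlib
from collections import defaultdict

DOWNVOTE_THRESHOLD = 0.75  # the 75% figure from the comment
MIN_VOTES = 20             # hypothetical floor so a handful of votes cannot block


class VoteLedger:
    """Tracks up/down votes per content hash and decides what gets blocked."""

    def __init__(self) -> None:
        # content hash -> {voter_id: True for a downvote, False for an upvote}
        self._votes = defaultdict(dict)

    def vote(self, content: bytes, voter_id: str, down: bool) -> str:
        digest = hashlib.sha256(content).hexdigest()
        self._votes[digest][voter_id] = down  # one vote per voter; latest wins
        return digest

    def is_blocked(self, digest: str) -> bool:
        votes = self._votes.get(digest, {})
        if len(votes) < MIN_VOTES:
            return False
        downs = sum(votes.values())
        return downs / len(votes) >= DOWNVOTE_THRESHOLD
```

Counting one vote per voter ID only helps if IDs are costly to create; on an anonymous network where anyone can mint new identities, this check alone would be easy to game.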

But once you put private into the mix, you lose the crowd you’d need to vote for morality. Now you’ve got cases where MGM, Sony and BMG hire people to infiltrate the networks and shut down any post they deem unfit.

Privacy is the difference between the dark web and the public torrent scene.

Which AI is scanning your computer and how?

And why?

What I don’t understand about these projects is why can’t we both have them and protect the children

Think of this as closer to Signal than to a social media platform. It’s a protocol, so there’s no saying that you couldn’t build a social media site with it, but for now the demo app that I saw today is just chat. The parties involved share public keys with each other, and from then on, everything is encrypted so that only those people in the chat can read it.

With that model, censorship is not really feasible. If you’re one of the peers in the conversation, you can say “guys, that’s gross, stop” or send screenshots to the cops or whatever, but that’s about it.

Ultimately, if the only way the Authorities have of acting against terrorism/pedophiles/etc is by infringing everyone in the country’s right to privacy, they’re doing a shit job and need to be replaced.

You really can’t have it both ways. It’s morally bankrupt to launch protocols that clearly will be used for abhorrent purposes and simply hand wave it away because you’re uncomfortable with the reality of the situation.

I think we all wish that weren’t the case, but it is.

Saying crap like it wouldn’t be a problem if law enforcement would just “git gud” makes you complicit

I agree that you can’t have it both ways; for everyone to have privacy, horrible people have to get privacy, and they will do horrible things with it.

The thing is, people doing horrible things are already incentivized to take precautions, and it’s not possible to uninvent cryptography. Making it more accessible helps the innocent more than it does the guilty.

Making it more accessible helps the innocent more than it does the guilty.

Source?

Common sense? Take the Signal protocol. There’s more innocent people using it for whatever purpose than there are guilty people using it for whatever purpose. You can’t not develop or use a technology just because somebody else might use it for nefarious purposes. Bad people do bad things on the normal internet, does that mean we should start restricting internet usage because someone might do something bad? Of course not, that’s just stupid. So why doesn’t the same hold true for encryption technologies? Sure, someone with ill intentions is going to do things with it we don’t like, but the majority of users just want to use said technology so governments and corporations don’t see what they are doing.

Or are we not entitled to privacy simply because some people use their privacy to harm others?

There’s literally no way you can back up any of these claims. It’s just what you want to be true.

All I want is for you to admit that you think protecting the “privacy” of people’s mundane text conversations is worth enabling Terrorism, Child Sexual Abuse, Human Trafficking, Organized Crime, etc etc etc

To be clear, I think people should have a basic expectation of privacy. But at what cost? Like we’ve established it’s impossible to have one without the other.

So if a predator locks a victim in a closet, does that make lockmakers morally reprehensible?

Locks don’t make predators untraceable

But they do offer an easy, quick and convenient way for a predator to contain their victim, much simpler than tying them down or holding them.

Gonna have to agree to disagree here: to my mind, taking away tools that allow people to evade the restrictions of unjust regimes because you buy into the “for the children” fear mongering makes you complicit in the rising totalitarian state.

Just because people use “for the children” in inappropriate scenarios to further an agenda has nothing to do with this discussion and you know it.

If you make a tool to essentially hide people’s activity online, you KNOW what it’s going to ultimately be used for.

…and you clearly think it’s worth the trade off. So no need to continue

That would require that users have access to other users’ traffic, compromising security. After all, there’s no reason the government or corporations couldn’t operate many ‘users’.

I love this.

We need more security, more control over our own activities.

To people who plead we give up our anonymity to catch burglars, we already did that and we got mass surveillance by state, nation and the private sector. Seems like the burglars are still out there though.

I’m working on a similar-ish protocol (up and working), basically IPFS but better ;-) Does anyone know where I could get some feedback or show it to people interested in those kinds of things?

Cheers

Submitting and getting a talk about it accepted at DEF CON seems like a good way that worked here. Of course having name recognition like CDC going back to my childhood also helps :-)

Whoah yeah I’d love pulling something like that off, but I’m not sure I’m ready for it, I’m completely unknown in this space, also Vegas and Texas is far away (I’m in the EU) :-).

Maybe online? I’ll check that out, thank you for the good idea!

Cheers

Try looking to see if there are any local defcon, 2600, or BSides groups near where you’re at. That might give you a good start.

While the pirate in me says “hell yeah!”, the system administrator in me says “Fuuuuuuuck”. I was once part of an IRC network, and one of the biggest issues we had was with Brazilians who would break our rules and get banned. Just a minute or two later, they were back. It got so bad that we just said “Fuck it. We’re banning all of Brazil.” Not an ideal solution, but it beats spending our time chasing the majority offenders. It’s the 80/20 rule, where 80% of your problems are caused by 20% of your users.

Now let’s pretend somebody builds their new app around this new tech. I love the concept, but how do you keep order? How do you ensure people follow the rules? The only thing keeping users in line would be the fear of losing their “brand” (their username, their reputation). If the new app is something like a chatroom, there’s no “brand” to be had, and you can simply use a new name. It would, obviously, be very different if the app were based around file hosting like Google Drive, because you don’t want to lose your files, but anything with low retention will likely be rife with misconduct due to anonymity.

On the other hand, it would allow for a completely open internet, that no single government can shut down, which we’re seeing happening more and more, with China, Iran, Russia, and Myanmar all shutting down the Internet, or portions of it, when those in power feel there’s a threat to the status quo.

One possibility is to allow users to join a controlled allowlist (or a blocklist, though that runs more into that problem), where some actor acts as a trust authority (which the user picks). This keeps the P2P model while still allowing for large networks since every individual doesn’t have to be a “server admin”. A user could also pick several trust authorities.
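
Sketched in Python, the trust-authority idea could be as small as this. All names are hypothetical, and the `quorum` setting (how many of a user's chosen authorities must vouch for a peer) is an addition for illustration, not something the proposal specifies.

```python
from dataclasses import dataclass, field


@dataclass
class TrustAuthority:
    """An actor that publishes an allowlist of peer IDs it vouches for."""
    name: str
    allowed: set = field(default_factory=set)


@dataclass
class UserPolicy:
    """The authorities one user has chosen to trust."""
    authorities: list = field(default_factory=list)
    quorum: int = 1  # how many chosen authorities must vouch for a peer

    def accepts(self, peer_id: str) -> bool:
        vouches = sum(peer_id in a.allowed for a in self.authorities)
        return vouches >= self.quorum
```

For example, a user who trusts two authorities with `quorum=1` accepts any peer that either of them lists, while `quorum=2` requires both to agree. The filtering happens entirely on the user's side, so the underlying network stays peer-to-peer.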

Essentially, the network would act as a framework for “centralized” groups, while identity remains completely its own.

The only thing keeping users in line would be the fear of losing their “brand”

This is solving a non-problem. Yeah stupid script kiddies and trolls might care but that is noise easily blocked. The actual people causing harm, committing massive crimes that flood the system with government, or causing massive DoS attacks, none of them care or even want to have something they could lose on a system like this. It’s better to be anonymous and not have a brand.

Look at what happens here in the Fediverse. People take time to exclude the havens of the problematic and that resolves enough of the issue to make the services work. But that means that someone is making decisions, and that someone can be targeted to take down a site or to not defederate even when the community thinks it’s best. There is still a human involved that can be bought or beaten.

The only way to make a system where people follow the rules is to make a place where people don’t care to break them. Rules give those who follow them justification for punishing those who don’t. They don’t actually stop people from breaking them.

I think if this system can be hardened against attacks and it’s easy to deal with spam then we all just coexist with the shit that happens in the background we don’t see.

I can’t imagine a successful, open social network based on this. The entire value of social networks is the moderation (in the same way any bar or club keeps certain people out, via rules, signals, or obscurity, and allows likeminded people to relax and socialize).

I love that this project exists for other use cases. And I could see invite-only, small social networks forming. I just don’t think you’d want to build a Twitter or Reddit clone using it.

I mean, people can already use VPNs or whatever to circumvent protocol-level blocks. You prevent that with usernames or email verification or some equivalent, and there’s no reason you wouldn’t just keep doing that in these new apps.

True. Regardless of nationality, background, or interests, moderation will always be a “problem” in these platforms. Sadly the same tool that can target these obvious spammers can be used to silence honest minorities, and the boundary between these groups is also not set in stone.

I believe financial consequences can be very useful to make it expensive to spam or be abusive.

For example, for a user to access an app:

- The user is required to put up X amount of money as collateral

- The user can retrieve the funds if they choose to discontinue use of the app

- If a user is reported for abuse, a small fine is deducted from their collateral

User reputation and distribution of fines:

- If a user has multiple accounts in good standing, the initial collateral to access new apps is discounted for good reputation.

- The proceeds from fines can be distributed to the app’s treasury or to users with good rep.
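
The rules above can be sketched as a small ledger. The amounts, the discount formula, and the class and function names are all made up for illustration; fines here go to the app treasury, which is one of the two options listed.

```python
BASE_COLLATERAL = 1000           # hypothetical amounts, in cents
FINE = 50
DISCOUNT_PER_GOOD_ACCOUNT = 100
MIN_COLLATERAL = 200


def required_collateral(good_accounts: int) -> int:
    """Discount the deposit for each other account in good standing."""
    discounted = BASE_COLLATERAL - DISCOUNT_PER_GOOD_ACCOUNT * good_accounts
    return max(MIN_COLLATERAL, discounted)


class App:
    def __init__(self) -> None:
        self.treasury = 0
        self.deposits = {}  # user_id -> remaining collateral in cents

    def join(self, user_id: str, good_accounts: int = 0) -> int:
        amount = required_collateral(good_accounts)
        self.deposits[user_id] = amount
        return amount

    def report_abuse(self, user_id: str) -> None:
        # Never deduct more than the user still has on deposit.
        fine = min(FINE, self.deposits[user_id])
        self.deposits[user_id] -= fine
        self.treasury += fine

    def leave(self, user_id: str) -> int:
        """Refund whatever collateral is left when the user quits."""
        return self.deposits.pop(user_id)
```

This leaves out the hard parts: deciding whether a report is legitimate, and holding money without reintroducing a central party or tying a payment trail to an otherwise anonymous identity.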

That’s a very interesting approach, and may work for very specific applications. It seems unlikely most platforms or apps would adopt such an approach since it would be a very high burden to overcome for users to sign up, which is what lets an app or platform grow. People are rather attached to money, but there’s also the other side of this. A user may not care about the fine and continue to break the rules as “the cost of doing business” because they’re wealthy.

It’s definitely going to be a technology that drives anonymity, and everything that comes with it, for better or worse. I can see a lot of good this can do, but also a lot of crime it can facilitate.

I agree. There is a potential barrier to entry, and growth. I argue:

- People part with money for a cause or belief. Culturally, privacy apps are different: the UX is inconvenient and unfamiliar, there are usually no ‘email signups’, they are not run on ads or sales of data, and the software is free but has a learning curve. People do it anyway because they believe it is right

- It’s not unusual to pay $1-$15 for an app in a mobile app store. At least they can get their money back (it’s actually free to use)

- Users can be compensated when a ‘rich’ abusive actor is fined, and are at the same time incentivised to report, in the case of, e.g., a chat app

- A sponsor could risk their collateral to allow access to a user who cannot manage the initial financial barrier

The first point is the most important IMHO: privacy users accept the learning curve and inconvenience because they believe privacy is more important. Because of this, I believe the burden is not as high as we think, and that a ‘free to play’ alternative means of accessing privacy-respecting apps (by this idea or something else) is as essential to supporting and protecting privacy as E2EE vs server-side encryption.

Hey, those were exactly my thoughts after reading it. Let’s speak more clearly.

A group uses the protocol to set up a platform to distribute illegal stuff: child porn, a drug marketplace, warez…

Sure, it might become the “bad protocol” in the news, with people and industry trying to blame and block it. But because of the decentralized structure, like the onion network, that is hard to do.

Another aspect which should be thought about is governments in repressive countries. Iran, North Korea, China, Russia… Name it.

You could block the protocol, but is it set up by design to make it actually hard for an NGFW to just recognize the packets by their headers and filter them? I didn’t check in detail, but I hope they implement some mechanism to make this hard.

So, if I get it right, it’s basically a TOR network where every user is both an entry node, exit node and middle nodes, so the more users you get, the more private it is.

However, wouldn’t this also mean that just by using any of the apps, you are basically running an exit node - and now have to deal with everything that makes running a TOR exit node really dangerous and can get you into serious trouble, swatted or even ending up in jail?

From a quick Google search, jail sentences for people operating TOR exit nodes are not as common as I thought, but it can still mean that you will have to explain in court why your computer was transmitting highly illegal data to someone they caught. And courts are expensive, they will take all of your electronics, and it’s generally a really risky endeavor.

Cause even in tor it eventually leaves the network.

All anyone could know is that this data came from your direction, and it would also be known that it wasn’t you transmitting it. Anything beyond that is just a sea - it’d be like arresting a truck driver for unknowingly moving drugs buried in stuffed animals.

They’d essentially have to by hand arrest every single node that participated to the source - assuming that chain was never broken along the way - to get anything reasonable out of an arrest.

Most importantly, this is all public knowledge, so after a couple of essentially useless arrest attempts for unknowingly hosting encrypted data, I believe they’d have to back off.

They’d essentially have to by hand arrest every single node that participated to the source

I may be wrong on this, but I think that’s exactly the risk associated with hosting TOR Exit nodes.

If they bust a darknet server, for example one hosting child pornography, they sometimes end up with logs of every IP that was accessing the said node. IP of every exit node that someone used to route their traffic. And they do investigate, and it will affect your life, even if you are not doing anything illegal - and even that line is pretty blurry in some of the countries.

If that IP is yours, you will get a visit from police. Being accused of anything in regards to child pornography is not a laughing matter. From what I’ve heard, they may take all of your electronics, you will get interrogated and you have to prove beyond doubt that you did not know that someone is using your computer - the exit node - for such activities. In some countries, merely enabling someone to distribute or access child porn - which is exactly what an exit node is doing - is illegal. And while TOR has been in the public knowledge for a pretty long time, you may get a judge who has never heard about TOR and has to research it for your case. And in addition to that, you are now literally being investigated for distributing child porn. If someone finds that out, it will ruin your reputation, and history has shown that being accused of something is enough for many people, no matter the result. Good luck explaining to your grandmother how TOR works, or to HR at your company why you are being investigated for child porn distribution or why they confiscated your company laptop.

That’s why there are so many warnings on never using your home IP for exit nodes - and that’s exactly what would happen in Veilid.

In general, running an exit node from your home Internet connection is not recommended, unless you are prepared for increased attention to your home. In the USA, there have been no equipment seizures due to Tor exits, but there have been phone calls and visits. In other countries, people have had all their home computing equipment seized for running an exit from their home internet connection.

So, it essentially boils down to who is handling the investigation of your case. The police can either accept that it’s an exit node and a waste of time and leave you alone, or they can make your life a living hell if they choose to.

what you are missing here is that they have to be able to prove that there’s illegal data going through your computer in the first place

If it’s the exit node for the illegal stuff, then would that point to you?

Veilid doesn’t have exit nodes

That’s how I read it too. More like a fully encrypted, anonymized, trackerless BitTorrent client, or even more like Hotline (a pair of sort-of FTP/chat/BBS client and server apps), for the older pirates in the audience.

From how I understand it, in Veilid everyone is both an entry, a relay and an exit node - there’s no distinction. You have to have exit nodes, because the communication has to go through somewhere, so the receiving server will always know the IP of the last node (the exit one). The whole main point of TOR (and Veilid, which seems based on the same thing) is that since you go through three nodes, each node can only tell where the request is coming from and where to send it next. So the server doesn’t know where the request originally came from, but knows the IP of the exit node.

The issue is that if they bust someone for doing illegal shit, your IP may be investigated. They don’t know what communication came from you, but something may have, since just by using Veilid, you become an exit node. Or I’m misunderstanding it, but that’s what I understood from the description.

An exit node lets people inside the network access clearweb websites outside the network. Veilid can’t do that. It’s for communicating inside the network only.

So far (to me) it looks like it doesn’t have the risks and compromises of TOR, and used right it can make the internet impossible to censor.

That sounds really interesting

Yeah, we are in the minority…

A lot of devs are in it because it is a decent paying job. But they don’t really live and breathe this stuff. And they certainly don’t share our philosophy.

Could you elaborate on the spyware in Thunder? I use it somewhat frequently and have never heard about this.

Just in time for lemmychat :D

I thought I recognized that name: Cult of the Dead Cow. They created Back Orifice which was a great parody of MS’s Back Office.

(Learning how to do url links here…sorry if that doesn’t work)

They created Back Orifice which was a great parody of MS’s Back Office.

Man, I had a lot of fun with that and Sub7 back in the day.

I mean, hypothetically speaking depending on statute of limitations.

I’m hyped AF, can’t wait till the documentation is a little more mature

Imagine BitTorrent where you don’t know the seeder’s IP address.

Google I2P

Holy cow, haven’t been to that bbs in like 30 years, awesome to see 👍🏽

I used to collect the Cult of the Dead Cow text files. Hacking, phreaking, and weird stories. Looks like someone gathered a bunch of them here.

Same… Pleasantly surprised to learn that they’re still around

Fun fact, textfiles is Jason Scott’s site. He’s the head archivist at the Internet Archive. Always doing cool stuff if you’re bored and have some time to blow this morning.

How is this different from i2p?

Good question. Perhaps someone over at https://i2pforum.net/ knows?

I wanted to have a look at the demo VeilidChat but it seems to be gone. Does anyone have a working link?

I didn’t want to be the upvoter that broke the 666 total, but then I remembered that this isn’t that other site and: fuck yeah!

Commenting this at all is exactly what happened on “that other site”